Introduction

I set out on this practice-based research to look at the physiological and psychological constraints of human visual perception, through the lens of restaging and interpreting the early works of John and James Whitney.

The result of this process is the short film Navagraha, available on Vimeo (and embedded below). It is an expression of a system used to generate images in a manner similar to, and inspired by, John Whitney's computer-aided graphics process, which made use of an army surplus analogue targeting computer. My system, the Harmonograph, was initially designed as a common test bed on which various techniques that explore the nature of time, and how we capture images and create moving images, could be implemented.

However, as a result of this process it became apparent that there was much to explore within the mechanics of that system, and within certain misreadings of, and inspirations from, previous techniques. Whilst these do not directly answer the questions I had hoped to address, they still provide some indirect insights. Perhaps most importantly, this research represents the beginning of what I suspect will be a long journey, looking at the many different questions this area of research raises.

Specifically, it raises an important question about how machines which can create images or sound are subject to rules and constraints, and how it is these rules and constraints which lead to the generation of things of interest. We then walk an interesting tightrope as we enter the virtual world of software, where choosing which constraints to apply becomes more and more important.

The following write-up looks at the creation of the Harmonograph, and how the film Navagraha was created.

John and James Whitney

John and James Whitney were American artists, regarded as pioneers in the field of computer graphics. In the context of this research, I am primarily interested in the mechanical analogue computer system John developed, seen in both his own films, most notably Catalog (1961), and his brother James' film Lapis (1966).

John Whitney worked at Lockheed during World War II, which exposed him to the work being carried out on analogue targeting computers - complex combinations of electronics that took various inputs and assisted an operator in targeting by predicting the likely path of a target. He realised that these would provide a way of plotting and guiding movements in a repeatable manner, thus opening up all sorts of possibilities in animation.

I don't know how many simultaneous motions can be happening at once. There must be at least five ways just to operate the shutter. The input shaft on the camera rotates at 180 rpm, which results in a photographing speed of 8 frame/s. That cycle time is constant, not variable, but we never shoot that fast. It takes about nine seconds to make one revolution. During this nine-second cycle, the tables are spinning on their own axes while simultaneously revolving around another axis while moving horizontally across the range of the camera, which may itself be turning or zooming up and down. During this operation we can have the shutter open all the time, or just at the end for a second or two, or at the beginning, or for half of the time if we want to do slit-scanning.

Whitney's son, John Jr., describing the machine in 1970 [1][2].

Persistence of vision and motion graphics

In order to create moving pictures that the human brain can perceive as a credible moving image, we find ourselves constrained by specific limits. Motion is perceived when we see a sequence of images presented quickly enough, and a minimum of around 24 frames per second is needed to create a smooth and acceptable sense of motion. For any system, computational or not, to create moving images in real time, it has to be capable of generating a new image every 41.666 milliseconds (1000 ms / 24). For higher frame rates, such as the 60fps typically found in television and general computing, that drops to 16.666 milliseconds.
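As a quick check on those figures, the time available per frame is simply one second divided by the frame rate. The short Python sketch below is only an illustration (the function name is made up for this write-up, and it is not part of the Harmonograph code):

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to produce one frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# Print the budget for the two frame rates discussed in the text.
for fps in (24, 60):
    print(f"{fps} fps -> {frame_budget_ms(fps):.3f} ms per frame")
```

Anything that takes longer than this budget to draw cannot be shown in real time at that frame rate, and has to be rendered frame by frame instead.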

Putting that into context, we can look at the number of instructions a computer can carry out per second (typically measured in millions of instructions per second, or MIPS). We see a development over time from the UNIVAC I in 1951 at 0.002 MIPS, all the way up to the latest processors being able to carry out over 2 million MIPS. The landscape has most obviously changed in terms of what can be achieved in real time [3].

This fixed limit creates a boundary around which we must make choices. When John and James first worked on their films, and the associated technology, they had little choice but to work in a manner similar to that of stop motion animators, creating individual frames of a film in non-real time - although the technology they worked on presented huge advantages in automation [1][2]. We can see this in the time James took to create the film Yantra, by hand between 1950 and 1955, compared with Lapis, made using the computer in about two years.

The developments in technology mean that, at this point in time, we have the opportunity to explore the techniques they used to create their films in real time and in an interactive manner, and it is this that my research has looked at.

The Harmonograph

There are, it seems, very few specific descriptions of exactly how the technology John developed worked. It seems likely that it was a system that evolved over time, and that it was much in demand for commercial work through John's company, Motion Graphics, Inc. [4]. This proves to be both a curse and a blessing: there is little to work from, but it allows me to define a system which is inspired by their work rather than an exact recreation.

There are two key elements to the Harmonograph, and it is the combination of these elements that determines how individual scenes were composed and generated for the film.

- A rotor system, which is primarily concerned with determining the movement of objects within the system [5]

- Drawable objects, a wide generic class which covers anything which can be placed on a rotor, and drawn into the 3D scene

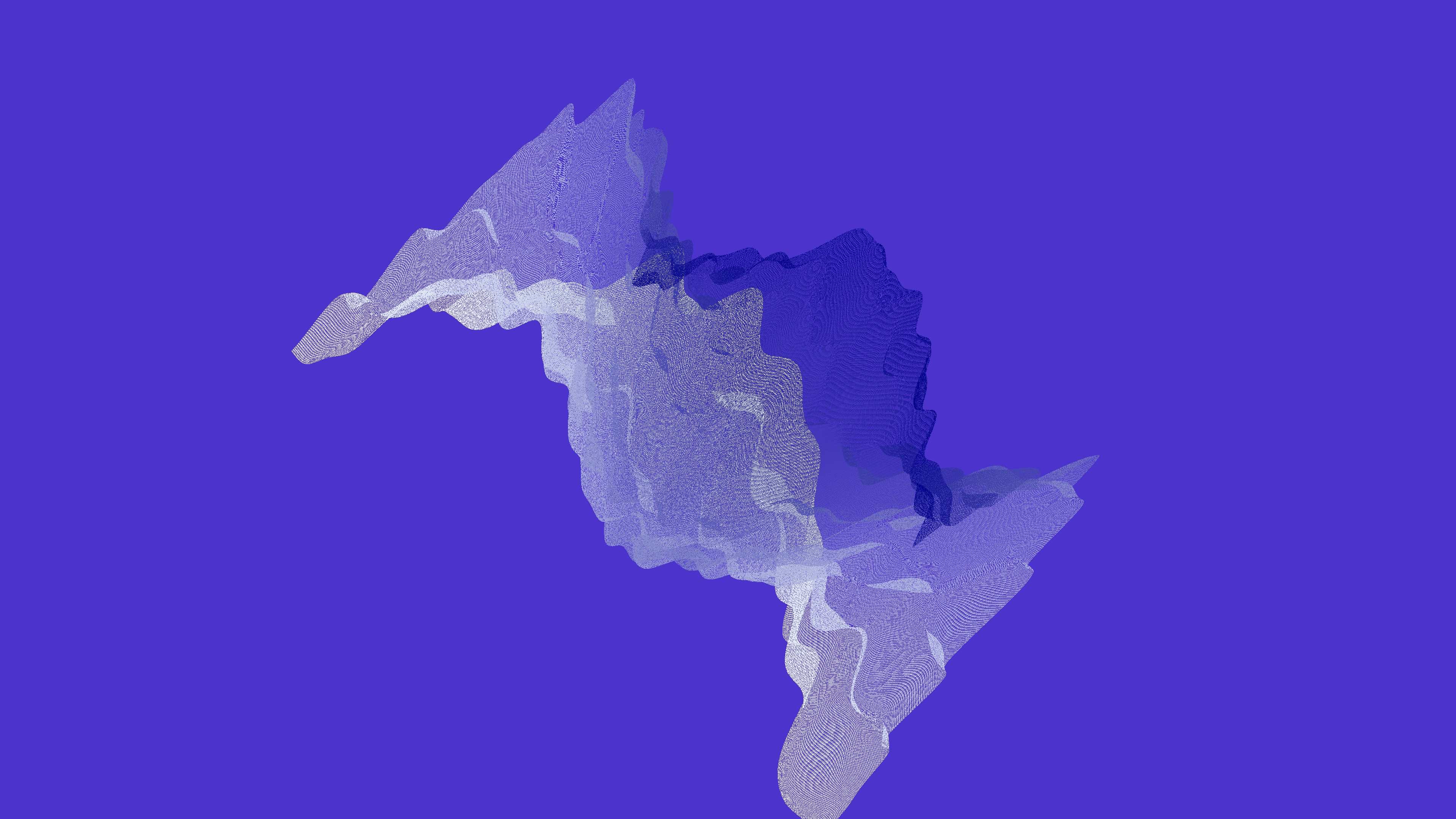

Navagraha makes specific use of a single drawable object type, the perforated mesh: a 3D object which consists of a surface with many holes, and which can have its individual points deformed to create more interesting three-dimensional shapes.

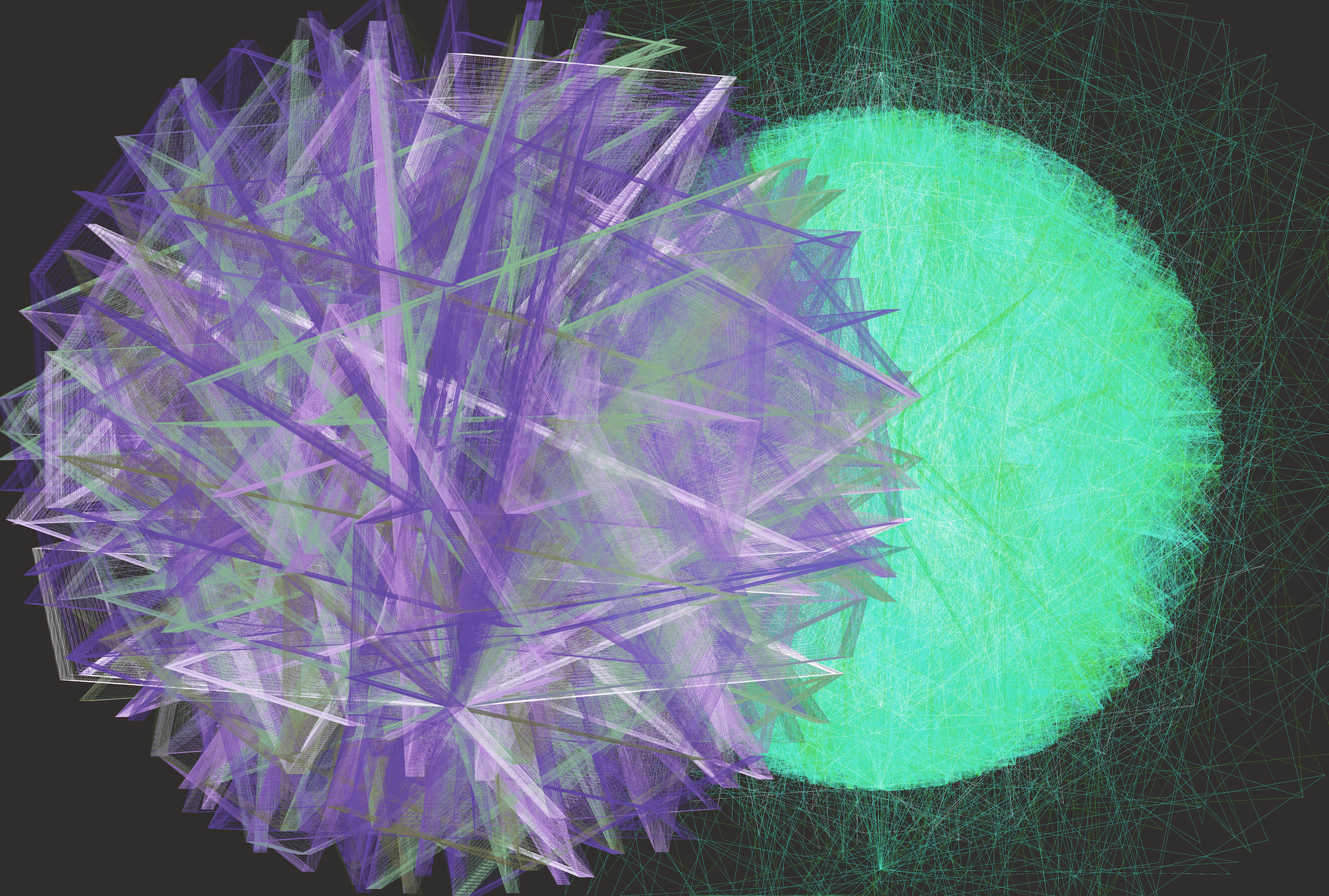

The Rotor system

A rotor is defined as a torus-like object, which has a position in space, a size, and a rate of spin about each of the X, Y and Z axes. Other objects, including further rotors, can be attached to this object using a relative position. These are primarily attached at a point on the torus diameter, though there is no reason why this constraint needs to be followed.
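To make the idea of nested rotors concrete, here is a minimal sketch in plain Python rather than the three.js used for the Harmonograph itself. The class and function names, the X-then-Y-then-Z rotation order, and the treatment of "size" as the ring radius are all assumptions made for illustration, not a description of the actual implementation.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def rotate_xyz(p: Vec3, angles: Vec3) -> Vec3:
    """Rotate p about the X axis, then Y, then Z (angles in radians)."""
    x, y, z = p
    ax, ay, az = angles
    y, z = y * math.cos(ax) - z * math.sin(ax), y * math.sin(ax) + z * math.cos(ax)
    x, z = x * math.cos(ay) + z * math.sin(ay), -x * math.sin(ay) + z * math.cos(ay)
    x, y = x * math.cos(az) - y * math.sin(az), x * math.sin(az) + y * math.cos(az)
    return (x, y, z)


@dataclass
class Rotor:
    size: float                     # assumed to be the radius of the ring
    spin: Vec3 = (0.0, 0.0, 0.0)    # radians per second about X, Y and Z
    offset: Vec3 = (0.0, 0.0, 0.0)  # position relative to the parent rotor


def attach_on_ring(parent: Rotor, child: Rotor, angle: float = 0.0) -> Rotor:
    """Place the child on the parent's ring, at an angle in the parent's XY plane."""
    child.offset = (parent.size * math.cos(angle), parent.size * math.sin(angle), 0.0)
    return child


def world_position(chain: List[Rotor], t: float) -> Vec3:
    """World position of the last rotor's centre, for a root-to-leaf chain at time t."""
    p: Vec3 = chain[-1].offset
    # Walk back up the chain: each ancestor spins the point, then offsets it.
    for rotor in reversed(chain[:-1]):
        spun = rotate_xyz(p, tuple(rate * t for rate in rotor.spin))
        p = tuple(o + s for o, s in zip(rotor.offset, spun))
    return p


root = Rotor(size=30.0, spin=(0.0, 0.0, math.pi / 2))
child = attach_on_ring(root, Rotor(size=10.0))
print(world_position([root, child], 1.0))
```

Because each rotor only knows its offset from its parent, chains of any depth can be built up, which is where the more complex compound motions come from.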

This allows us to create, in a three-dimensional space, a movement effect similar to that which most children will be familiar with if they have played with a Spirograph, a toy introduced in 1965 and still made today, which allows the creation of patterns by allowing multiple rotations to take place through plastic templates of various rounded shapes. This is also similar to the outline images that can be drawn using a Fourier transform [6], where the terms of a series determine the radius of subsequent rotations, allowing complex images to be built up from a simple set of terms.
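The two-dimensional version of this idea can be written very compactly as a sum of rotating arms, each riding on the tip of the previous one. The sketch below is a generic illustration in Python using complex numbers; the names and the example values are assumptions, and it is not taken from the Harmonograph code.

```python
import cmath
import math
from typing import Iterable, List, Tuple

Term = Tuple[float, float, float]  # (radius, turns per cycle, phase in radians)


def epicycle_point(terms: Iterable[Term], t: float) -> Tuple[float, float]:
    """Tip of a chain of rotating arms at time t, where t runs from 0 to 1."""
    z = sum(r * cmath.exp(1j * (2 * math.pi * freq * t + phase))
            for r, freq, phase in terms)
    return (z.real, z.imag)


def trace(terms: List[Term], steps: int = 360) -> List[Tuple[float, float]]:
    """Sample the closed curve drawn over one full cycle."""
    return [epicycle_point(terms, i / steps) for i in range(steps)]


# A large slow arm carrying a smaller, faster arm turning the other way.
curve = trace([(1.0, 1, 0.0), (0.5, -3, 0.0)])
```

Each term plays the role of one rotor: the radius is its size and the frequency is its spin rate, so adding terms corresponds to attaching further rotors to the chain.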

Early experiments with defining this system showed how it creates very compelling animations from very simple systems. Some examples of just the rotor system from early testing are shown below, with the rotor drawn as either a torus or a disc.

Drawable Objects

Whilst the rotors are objects which can be drawn into a scene, they are not designed to be the source of any imagery. For this, a generic class of drawable object was created, which could be positioned at a given point in space on a rotor. Initial testing and development was done in three.js using spheres, and in some more advanced tests objects were given trails by capturing their position over time and updating subsequent child objects.

Examples of early tests using three.js are shown below - in this case using objects which also track position.

The description of the cards James prepared for his films Yantra and Lapis provided the inspiration for the perforated meshes used in the system for my film Navagraha. Specifically, the description of James punching holes in index cards for Yantra, to use as templates for painting the cards used in the eventual animation, provoked thoughts of how holes in objects could be used to enhance the visual experience, and how such objects interact with each other.

Perforated meshes

A plane in two dimensions is divided into a number of smaller segments on a grid basis. Through the use of two different processes I was able to create a more interesting shape than just a flat solid plane.

- Perforations / holes - through three different algorithms, triangular, diamond and square shaped perforations could be created in each mesh by defining which triangles would represent a face, and not including those which should remain a hole.

- Distortion of vertices from their regular grid-like positions - each dimension could have one or more functions applied to it, to transform it. At this stage of the build these include:

- Noise based transformations - using Perlin noise and appropriate coefficients to add points of structured random interest

- Trigonometry based functions using Sine and Cosine transformations

- In all cases, the driving input value can be chosen from the nominal x or y coordinates of the point being transformed, or its index number

(x * y) + y
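The two processes described above can be sketched roughly as follows. This is a simplified Python illustration, not the Harmonograph's own code: it covers only a square perforation (every other grid cell left out, in a chequerboard pattern) and a sine-based distortion driven by the nominal x coordinate. The function names, the chequerboard rule and the row-major vertex indexing are all assumptions, and Perlin noise is left out because it needs an external library.

```python
import math
from typing import Callable, List, Tuple

Vertex = Tuple[float, float, float]
Triangle = Tuple[int, int, int]


def perforated_grid(
    n: int,
    hole: Callable[[int, int], bool] = lambda cx, cy: (cx + cy) % 2 == 1,
) -> Tuple[List[Vertex], List[Triangle]]:
    """Build an n x n grid of vertices and the triangles that are kept as faces.

    Cells for which hole(cx, cy) is true contribute no triangles,
    which leaves a square hole in the surface.
    """
    vertices = [(float(x), float(y), 0.0) for y in range(n) for x in range(n)]
    triangles: List[Triangle] = []
    for cy in range(n - 1):
        for cx in range(n - 1):
            if hole(cx, cy):
                continue
            a = cy * n + cx  # row-major index of the cell's first corner
            b, c, d = a + 1, a + n, a + n + 1
            triangles.append((a, b, c))
            triangles.append((b, d, c))
    return vertices, triangles


def distort(vertices: List[Vertex], amplitude: float = 1.0,
            frequency: float = 0.5) -> List[Vertex]:
    """Displace each vertex in z by a sine of its nominal x coordinate."""
    return [(x, y, z + amplitude * math.sin(frequency * x)) for x, y, z in vertices]


vertices, triangles = perforated_grid(5)
wavy = distort(vertices)
```

Triangular or diamond shaped holes would follow the same pattern, with a different rule for which individual triangles are left out.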

This ability to distort meshes became invaluable in creating interesting visual effects, which will be discussed later, specifically in the generation of moiré effects in a single object.

Factories

The use of repetition is an extremely important aspect of computational art. Compared to the physical arts it is generally a low cost activity, so its prevalence is not surprising. As is often the case, something done once or twice is not that interesting, but when done thousands of times or more it attains qualities that are often difficult to predict from a few instances. The attraction of, I'm sure, John's computer system, and certainly of my Harmonograph, is this ability to take something and quickly replicate it many times.

As a result, I created factories, which allowed individual Harmonographs, rotors and meshed objects to be created with variations in their parameters, thus allowing the creation of much more complex scenes. This relationship between individual objects and massed objects was to become key in the narrative of the film, as was the ability to change the distribution of parameters. The last two scenes show replicated objects with primarily the same parameters, but with a small difference in how noise was used to manipulate certain elements, creating a transition from a stately and graceful vision to a more turbulent and chaotic one as the film closes.
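As an illustration of the factory idea, the sketch below creates many parameter sets from one base set, varying a single parameter at random within a given range. It is written in Python with hypothetical names and parameters (`HarmonographParams`, `jitter` and so on are invented for this example), and only hints at how the real factories might be structured.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class HarmonographParams:
    rotor_size: float
    spin_rate: float      # radians per second
    noise_amount: float   # strength of the mesh distortion


def harmonograph_factory(count: int, base: HarmonographParams,
                         jitter: float, seed: int = 0) -> List[HarmonographParams]:
    """Create `count` parameter sets, each with its spin rate varied by up to +/- jitter."""
    rng = random.Random(seed)  # seeded, so a scene can be reproduced exactly
    return [
        HarmonographParams(
            rotor_size=base.rotor_size,
            spin_rate=base.spin_rate * (1.0 + rng.uniform(-jitter, jitter)),
            noise_amount=base.noise_amount,
        )
        for _ in range(count)
    ]


base = HarmonographParams(rotor_size=30.0, spin_rate=1.0, noise_amount=0.1)
scene = harmonograph_factory(1600, base, jitter=0.05, seed=1)
```

Changing only the distribution used for one parameter, while keeping everything else the same, is the kind of small difference that separates the stately and the turbulent versions of a scene.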

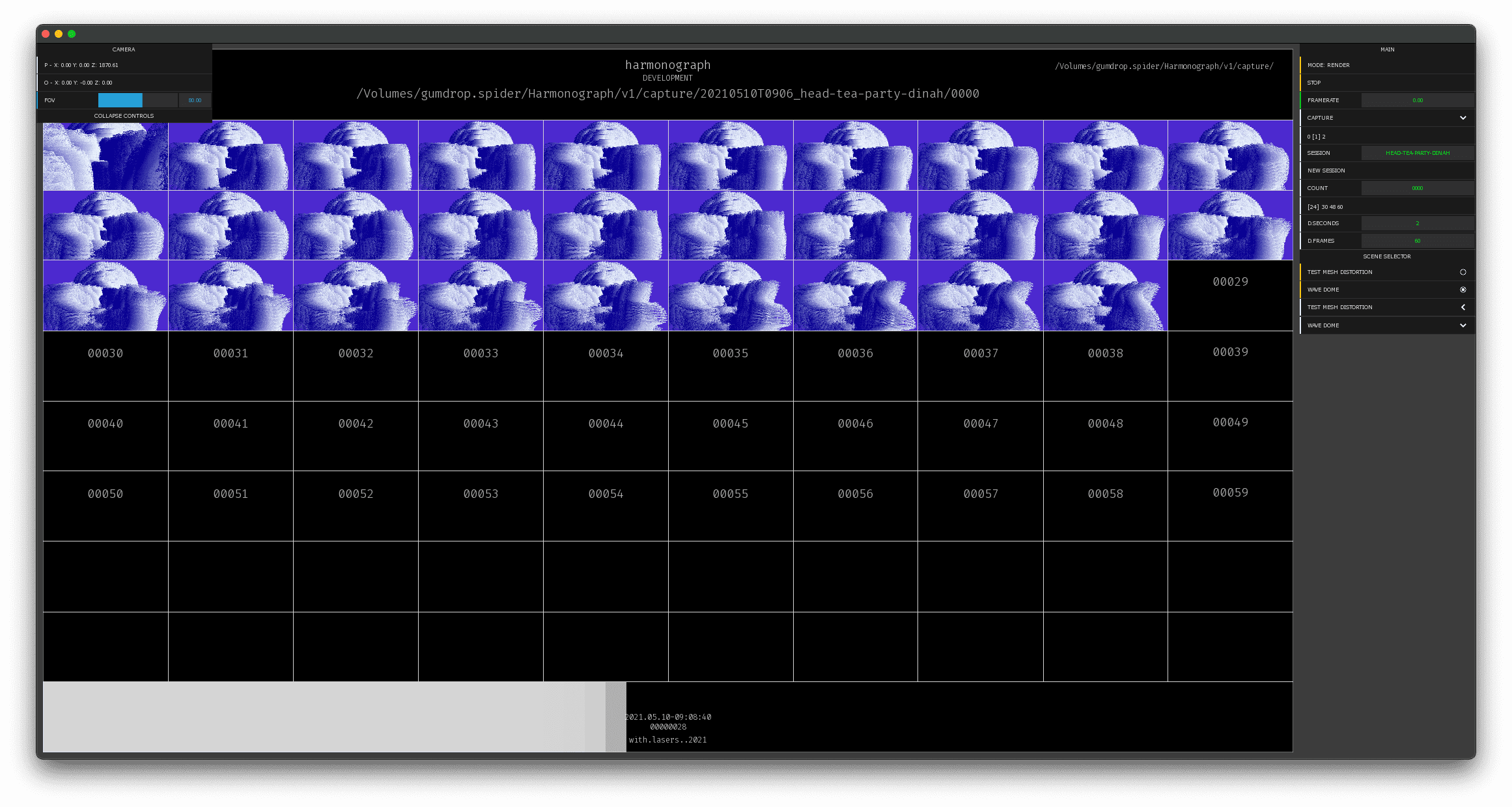

Limitations in generation of scenes

Despite all the advances made in computing, I found I was still hitting the perceptual speed limit: being unable to generate the required image within the goal time (in this case 41.666ms, as I had chosen a frame rate of 24 frames per second for the film). There are various reasons for this, which introduce different solutions and challenges for both this and future work.

Highly complex images - irrespective of any other constraints, it is always going to be possible to find some sort of upper limit for what can be generated. In this case some of the more complex sequences, for example the closing sequences, used 1,600 Harmonographs, each with two rotors and a single perforated mesh of 400 x 400 (160,000) vertices, resulting in the need for around 256 million vertices, and a triangle count of a similar order, to be calculated and rendered.

Challenges of current video distribution methods - digital video is dependent upon the coding and decoding of compressed images (the software that does this being collectively known as codecs), which vary in fidelity, the file sizes generated and so on. As a result of this, two specific issues were highlighted.

- The images produced by these systems are somewhat challenging for many standard codecs, resulting in muddy images and loss of detail, especially in the parts of the image where this detail is the most interesting part of the image. The codecs used for 4K / UHD video on platforms like Vimeo are much better at handling these types of detail than those used for standard HD video, therefore this can be avoided by working at a larger image size (in this case 3840 x 2160 pixels).

- This presents a subsequent issue: capturing each individual frame at high enough quality without additional hardware becomes more challenging as the image size goes up. Therefore, even for animations which run well within the limits available for the desired frame rate, capturing images of high quality requires some sort of solution that may not work in real time.

There is one further factor: at this time I have not specifically optimised the code in any way. As my target at this stage was to create a short film, some non-real-time rendering was acceptable, but future plans for the Harmonograph system would benefit from looking at this optimisation.

To solve these issues, I created the application in a way which allowed for the definition of specific scenes, which can then be interacted with directly by adjusting the camera position, and the spin rates and positions of rotors and drawable objects. In addition, once a preferred arrangement of these is found, it can be run as a render process, where each frame is given as much time as it requires to be created, and is then saved to disk.
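The key to a render process like this is that scene time is derived from the frame number rather than from the clock, so a frame that takes several seconds to draw still represents exactly 1/24 of a second of film. A minimal Python sketch of that idea follows; `draw_frame` and `save_frame` are hypothetical callbacks standing in for whatever actually draws and stores an image, and are not the application's real interfaces.

```python
from typing import Callable, Iterator


def frame_times(duration_s: float, fps: int = 24) -> Iterator[float]:
    """Scene time of each frame, independent of how long rendering takes."""
    for i in range(round(duration_s * fps)):
        yield i / fps


def render_offline(draw_frame: Callable[[float], bytes],
                   save_frame: Callable[[int, bytes], None],
                   duration_s: float, fps: int = 24) -> int:
    """Render every frame in turn, taking as long as each one needs."""
    count = 0
    for index, t in enumerate(frame_times(duration_s, fps)):
        save_frame(index, draw_frame(t))  # may be far slower than real time
        count += 1
    return count


# Example with stand-in callbacks: "draw" a frame as text, "save" it to a dict.
saved = {}
total = render_offline(lambda t: str(t).encode(), saved.__setitem__, duration_s=2.0)
```

The saved frames can then be assembled into a video at the intended frame rate, regardless of how long the render itself took.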

Self imposed Limitations

An interesting aspect of creating a virtual representation of a physical system is that, as an artist, you get to choose which physical properties have the possibility of creating further interest. This is an area where working with software is quite different to working with physical systems. In the physical world, we cannot ignore physical behaviours or limitations, and therefore need to design around them. A good example of this is the sculpture Paradigm [7] by Conrad Shawcross, installed outside the Francis Crick Institute in London. As a digital entity, there would be no need to consider how the structure supports itself, and how its weight needs to be supported by the smallest tetrahedron (approx 90cm each side), but in the real world this requires explicit engineering to support around 20 tonnes of Corten steel [8].

We can think of physical systems as perhaps a subtractive mechanism: we need to engineer them to address limitations, or embrace those limitations, so effort is expended on removing behaviours we find negative or encouraging those we find positive. In software, by contrast, we invariably start with the perfect representation of a system, and these behaviours need to be explicitly added, potentially at the cost of ever increasing complexity.

In creating the Harmonograph, I made conscious decisions about the limits I wanted to be bound by, which are summarised below:

- Rotors are purely virtual, and have no mass or other physical characteristics, therefore they can overlap and intersect with one another. Adding collision detection would have detracted from the exploratory element of "what if this were 3D".

- Child objects are always positioned within the bounds of the parent rotor - that is, if a rotor has a size of 30, then children can only be placed on a point 30 units from the centre of that object.

- Scenes would only be rendered with a constant set of spin / position values for the objects in them - a scene must evolve through the spatial and rotational variances that were present at its start.

- All objects are drawn as flat meshes, with no definition of materials or lighting

Future versions of the Harmonograph may well choose to define different limitations to be bound by, and I have certainly spent time considering what the impact would be of implementing some form of mass, momentum and contact friction in the rotor system, creating a system much more like a set of spinning solid rings.

The aesthetic qualities of the Harmonograph, and creating a short film from them

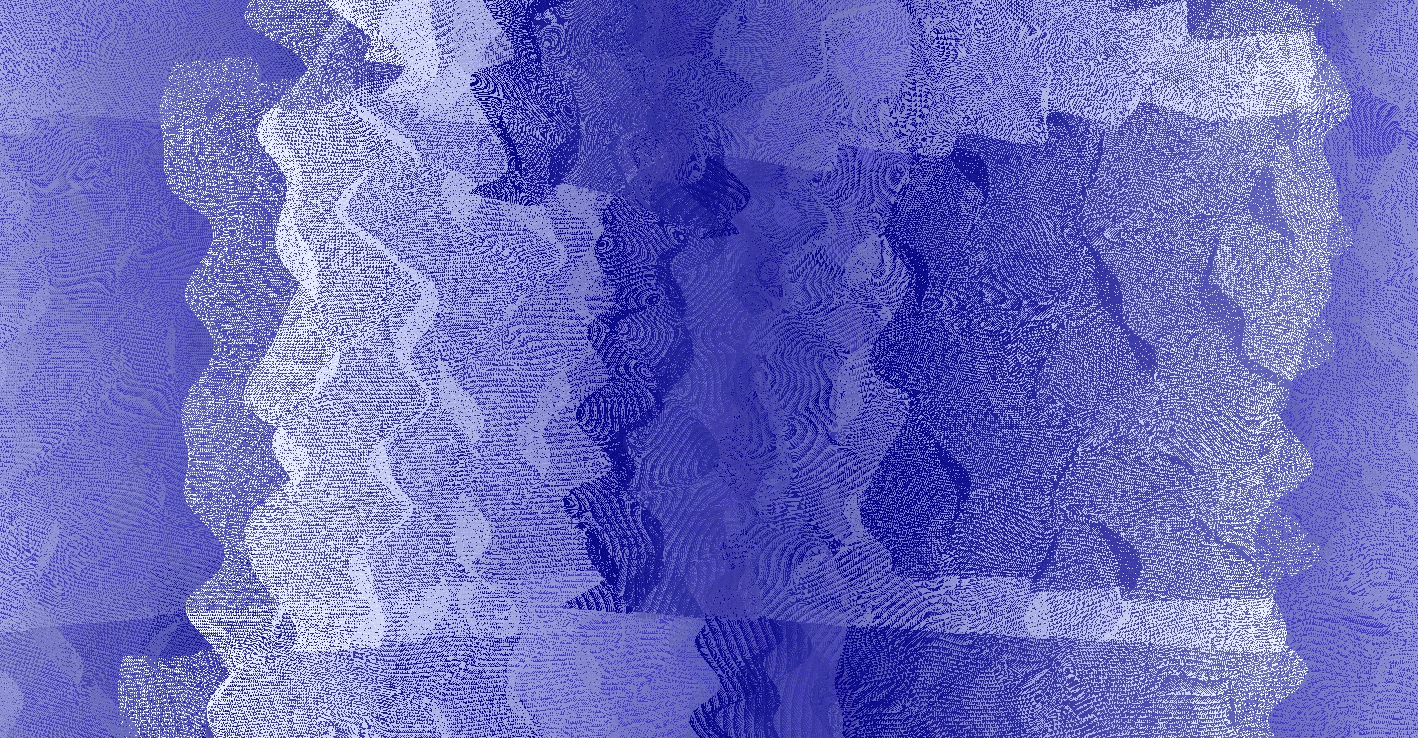

Moiré patterns - a revelation and distraction

The ability to add surfaces which had holes in them created interesting possibilities for overlaying them on top of one another, and of course one of the key factors in computer generated graphics is that creating multiple instances of a single object is (aside from performance) generally cheap. Whilst my initial thoughts were to use these as a way of presenting more complex colour combinations to the viewer (i.e. that you would see the background colour or that of another mesh through a hole, or the mesh's own colour where there was no hole), it became apparent in early experiments that the opportunities offered by smaller holes, and the moiré patterns generated by them, were much more interesting.

As a result, my work on the film became more about utilising this visual artefact, especially as it is something that would in normal usage be considered a rendering flaw, and which therefore has techniques, such as mipmaps, developed to avoid or reduce it.

The creation of these moiré effects results in a high degree of pareidolia - that is, the tendency to perceive meaningful images in nebulous visual stimuli, often seeing faces in objects such as clouds or other abstract collections of objects (a perhaps unsurprising result, given that research has shown we use dedicated areas of the brain purely for facial recognition, and one can presume this has been a strong evolutionary survival trait in a species that requires a high level of care in its early years).

It is particularly noticeable in the distorted meshes that use Perlin noise that faces quickly become visible, especially at the edges of the mesh, where the curves described by the noise functions very quickly resolve to facial features in profile: this curve an eyelid, the next one a nose, and the next the lips. This seems especially strong when the forward edge and rear edge are in close proximity, and when the darker edge suggests a hairline.

This lends itself perfectly to trying to create an abstract film, which can become a trigger for the viewer's own thought processes, and allow the mind to wander to whatever images are suggested by the sequences shown. I have already referred to the work of Conrad Shawcross, and this is also in reference to his use of moiré-like patterns in some of his work. It is again illuminating to consider the differences between a physical work and a virtual one. A physical demonstration of a moiré pattern requires the viewer to consciously move around the structure, finding new and interesting patterns to view, and being rewarded by the visual changes that can be seen in that process. With a virtual work such as a film, the viewer is static and must see what we present to them, therefore we must create these interesting opportunities for them. The combination of three-dimensional rotations and these perforated meshes creates an ideal opportunity to present these effects to the viewer.

Unsurprisingly, these effects were also explored by the Whitneys; this can be seen in the film Catalog, around the 2:24 mark [9].

Colour and depth

Typically, when creating something in three-dimensional graphics, consideration of materials and lighting become essential components, part of a chain of ever increasing realism demanded by the film and gaming industries. Despite this prescribed model, there exists plenty of room to carry out less realistic, but potentially more aesthetically interesting, renders.

For Navagraha, I decided upon the following constraints on material, colour and lighting, and therefore on the illusion (or lack of illusion) of depth:

- A simple colour scheme, based on a background colour and two foreground colours

- Vertex colouring of perforated meshes - all the meshes used the two foreground colours, with an interpolation between them for each vertex, transitioning from dark blue, to a blueish white.

- No material, and no lighting - so surfaces are rendered purely as the colour designated by the vertex colour
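The vertex colouring described in the list above amounts to a linear interpolation between the two foreground colours. A small Python sketch is given here; the RGB values are placeholders chosen for illustration rather than the colours used in the film, and driving the blend by vertex index is also an assumption.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]

DARK_BLUE: RGB = (0.05, 0.05, 0.40)      # placeholder value, not the film's colour
BLUEISH_WHITE: RGB = (0.90, 0.95, 1.00)  # placeholder value, not the film's colour


def lerp_colour(start: RGB, end: RGB, t: float) -> RGB:
    """Blend from start (t = 0) to end (t = 1), clamping t to that range."""
    t = max(0.0, min(1.0, t))
    return tuple(a + (b - a) * t for a, b in zip(start, end))


def vertex_colours(count: int) -> List[RGB]:
    """One colour per vertex, running from dark blue to blueish white."""
    if count < 2:
        return [DARK_BLUE] * count
    return [lerp_colour(DARK_BLUE, BLUEISH_WHITE, i / (count - 1))
            for i in range(count)]


colours = vertex_colours(5)
```

With no material or lighting applied, these per-vertex colours are the only thing that determines how a surface looks on screen.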

I find this approach allows my work to inhabit a liminal world: it is three-dimensional in origin, but as it lacks many of the depth cues we need to perceive it as three-dimensional, it is seen in a much more two-dimensional way. This is a key effect in a previous work, Plagioclase, where a three-dimensional model is used to paint shapes onto the viewer's two-dimensional plane of view.

Soundtrack

The focus of this research was on the visual element. However, the choice to make a short film raised the question of whether the film should have sound, and if so what it should be. The scenes were set up to be visually interesting in their own right, as previously discussed, using a process of establishing initial sets of variables that created interesting and evolving effects.

As much of the development was done listening to music, I had already viewed the majority of scenes with some kind of soundtrack, and it became apparent that an interesting interplay arose with the visuals, which were not necessarily in step with whatever was playing. Much like the pareidolia effect already discussed, a form of auditory meaning emerges from the linking of key musical features to visual features. It is perhaps not surprising that in images of such complexity it is always possible to find a degree of satisfying synchronicity.

My future plan for the Harmonograph is to link it directly into an audio generation process, making use of spatial sound relative to the speeds of rotation and other parameters, thus creating something more like a playable audio-visual instrument. However, this needed to be out of scope at this stage, as did making my own soundtrack. Therefore the music used is licensed from Adobe Stock, and I think I found the perfect piece: Theta to Delta Meditation by Joseph Beg [10], from the album Headphones only. The inspiration for the film is of course the work of James Whitney in Lapis, and the quiet meditative state that the combination of images and sitar provides in that film. I wanted to find a similar inner space.

Hailing from a deeply rooted longing to explore his inner world through sound he doesn’t consider it a choice as much a natural outcome of things. Yoga and meditation are fundamental tools in his life, including his work as a physiotherapist, and ultimately he sees his music as an extension of the practice of these ancient teachings. His work combines traditional meditative tunes with vaporwave aesthetics creating a universe of sound and resonance that’s infinite yet precise in execution. [11]

Composition and narrative

Having decided upon a soundtrack, it was then possible to consider how the scenes were to be assembled into a final form. I wanted to create the framework of a narrative, one which could work as a basis on top of which viewers could create their own internal world and/or narrative - to gently guide them, rather than railroad them into a specific state.

This starts from the very beginning, using a simple colour scheme throughout, and having a long opening sequence in which the viewer can immerse themselves in the blue / purple background, cleansing any previous images and thoughts from their mind. Generally I looked to have each scene build on the previous one's complexity, peaking at around 12 minutes, before collapsing into a smaller version of the final scene.

Two interludes are created with a single Harmonograph, allowing an appreciation of the complexity arising from just a single distorted mesh. At approximately 04:30 into the film there is a key change in the soundtrack, which allowed a visual transition from more straightforward scenes (primarily flat planes, interfering where they overlap in rotation) to much more complex scenes, with multiple Harmonographs with distorted planes and a much higher degree of moiré pattern generation, both through distortion of the planes and overlapping of systems.

Conclusions

I'm extremely pleased with the film I have created. The decision to make this the artefact for this stage of the research, whilst perhaps obvious, was not an easy one to make. It required me to think differently about how such a work was presented, and moving away from something interactive, or something permanently having its driving parameters changed, was a refreshing way of looking at how to develop a system in an interesting way. As I created more complex scenes, it became apparent that it was often possible to create something not quite intended, and that this would open up new possibilities for that scene or ones derived from it.

It is also satisfying to feel like I have barely scratched the surface of the possibilities this system offers, and I intend to take this work forward into my work over the summer - looking at alternative ways to use and develop it.

References

Creative Computer Graphics, Annabel Jankel, Rocky Morton - Cambridge University Press - 1984 - ISBN: 0-521-26251-8

Mainframe Experimentalism: Early Computing and the foundations of the digital arts, ed. Hannah B Higgins and Douglas Kahn, University of California Press, ISBN: 978-0-520-26838-8

Vector Synthesis: A Media Archaeological Investigation into Sound Modulated Light, Derek Holzer, Publisher Derek Holzer ISBN: 978-952-94-2221-8

Evolutionary Art and Computers, Stephen Todd and William Latham - Academic Press - 1992 - ISBN: 0-12-437185-X

Islamic Patterns, An analytical and cosmological approach - Keith Critchlow - Thames & Hudson - ISBN 978-0-500-27071-4

- "John Whitney", Wikipedia https://en.wikipedia.org/wiki/John_Whitney_(animator)

- "William Moritz on James Whitney's Yantra and Lapis", Center for Visual Music http://www.centerforvisualmusic.org/WMyantra.htm

- "Instructions per Second", Wikipedia https://en.wikipedia.org/wiki/Instructions_per_second

- "An Afternoon with John Whitney" https://www.youtube.com/watch?v=cP5Mj6ZvZJc

- "Digital Harmony: The Life of John Whitney, Computer Animation Pioneer" https://www.awn.com/mag/issue2.5/2.5pages/2.5moritzwhitney.html

- Motion Control: An Overview, John Whitney - American Cinematographer, December 1981: 62, 12, Arts Premium Collection, pg 1220

- But what is the Fourier Transform? A visual introduction. 3Blue1Brown, YouTube https://www.youtube.com/watch?v=spUNpyF58BY

- Paradigm 2016, Conrad Shawcross http://conradshawcross.com/blog/project/paradigm-2016/

- Cloaks, Optics and Surface Disruption | Conrad Shawcross | TEDxUAL, YouTube https://www.youtube.com/watch?v=DqOl97cfP3c

- John Whitney, Catalog 1961, YouTube https://youtu.be/TbV7loKp69s?t=145

- Theta to Delta Meditation (Short version) https://www.epidemicsound.com/track/gVUZkxK7Yo/

- Joseph Beg, Epidemic Sound https://www.epidemicsound.com/artists/joseph-beg/